| Age | Commit message (Collapse) | Author |

|---|

|

Summary:

This feature has been around for a couple of years and users haven't reported any problems with it.

Not quite related: fixed a technical ODR violation in public header for info_log_level in case DEBUG build status changes.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/12377

Test Plan: unit tests updated, already in crash test. Some unit tests are expecting specific behaviors of optimize_filters_for_memory=false and we now need to bake that in.

Reviewed By: jowlyzhang

Differential Revision: D54129517

Pulled By: pdillinger

fbshipit-source-id: a64b614840eadd18b892624187b3e122bab6719c

|

|

Summary:

ScopedArenaIterator is not an iterator. It is a pointer wrapper. And we don't need a custom implemented pointer wrapper when std::unique_ptr can be instantiated with what we want.

So this adds ScopedArenaPtr<T> to replace those uses.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/12470

Test Plan: CI (including ASAN/UBSAN)

Reviewed By: jowlyzhang

Differential Revision: D55254362

Pulled By: pdillinger

fbshipit-source-id: cc96a0b9840df99aa807f417725e120802c0ae18

|

|

Summary:

Fix compatibility with transparent huge pages by allocating in increments (1MiB) smaller than the

typical smallest huge page size of 2MiB.

Also, bypass the test when jemalloc config.fill is used, which means the allocator is explicitly

configured to write to memory before we get it, which is not what this test expects.

Fixes https://github.com/facebook/rocksdb/issues/12351

Pull Request resolved: https://github.com/facebook/rocksdb/pull/12378

Test Plan:

```

sudo bash -c 'echo "always" > /sys/kernel/mm/transparent_hugepage/enabled'

```

And see unit test fails before this change, passes after this change

Also tested internal buck build with dbg mode (previously failing).

Reviewed By: jaykorean, hx235

Differential Revision: D54139634

Pulled By: pdillinger

fbshipit-source-id: 179accebe918d8eecd46a979fcf21d356f9b5519

|

|

Summary:

The following are risks associated with pointer-to-pointer reinterpret_cast:

* Can produce the "wrong result" (crash or memory corruption). IIRC, in theory this can happen for any up-cast or down-cast for a non-standard-layout type, though in practice would only happen for multiple inheritance cases (where the base class pointer might be "inside" the derived object). We don't use multiple inheritance a lot, but we do.

* Can mask useful compiler errors upon code change, including converting between unrelated pointer types that you are expecting to be related, and converting between pointer and scalar types unintentionally.

I can only think of some obscure cases where static_cast could be troublesome when it compiles as a replacement:

* Going through `void*` could plausibly cause unnecessary or broken pointer arithmetic. Suppose we have

`struct Derived: public Base1, public Base2`. If we have `Derived*` -> `void*` -> `Base2*` -> `Derived*` through reinterpret casts, this could plausibly work (though technical UB) assuming the `Base2*` is not dereferenced. Changing to static cast could introduce breaking pointer arithmetic.

* Unnecessary (but safe) pointer arithmetic could arise in a case like `Derived*` -> `Base2*` -> `Derived*` where before the Base2 pointer might not have been dereferenced. This could potentially affect performance.

With some light scripting, I tried replacing pointer-to-pointer reinterpret_casts with static_cast and kept the cases that still compile. Most occurrences of reinterpret_cast have successfully been changed (except for java/ and third-party/). 294 changed, 257 remain.

A couple of related interventions included here:

* Previously Cache::Handle was not actually derived from in the implementations and just used as a `void*` stand-in with reinterpret_cast. Now there is a relationship to allow static_cast. In theory, this could introduce pointer arithmetic (as described above) but is unlikely without multiple inheritance AND non-empty Cache::Handle.

* Remove some unnecessary casts to void* as this is allowed to be implicit (for better or worse).

Most of the remaining reinterpret_casts are for converting to/from raw bytes of objects. We could consider better idioms for these patterns in follow-up work.

I wish there were a way to implement a template variant of static_cast that would only compile if no pointer arithmetic is generated, but best I can tell, this is not possible. AFAIK the best you could do is a dynamic check that the void* conversion after the static cast is unchanged.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/12308

Test Plan: existing tests, CI

Reviewed By: ltamasi

Differential Revision: D53204947

Pulled By: pdillinger

fbshipit-source-id: 9de23e618263b0d5b9820f4e15966876888a16e2

|

|

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/12114

Reviewed By: jowlyzhang

Differential Revision: D51745613

Pulled By: ajkr

fbshipit-source-id: 27ca4bda275cab057d3a3ec99f0f92cdb9be5177

|

|

Summary:

* Add some options to cache_bench to use JemallocNodumpAllocator

* Make num_shard_bits option use and report cache-specific defaults

* Add a usleep option to sleep between operations, for simulating a workload with more CPU idle/wait time.

* Use const& for JemallocAllocatorOptions, to improve API usability (e.g. can bind to temporary `{}`)

* InstallStackTraceHandler()

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11758

Test Plan: manual

Reviewed By: jowlyzhang

Differential Revision: D48668479

Pulled By: pdillinger

fbshipit-source-id: b6032fbe09444cdb8f1443a5e017d2eea4f6205a

|

|

Summary:

* JemallocNodumpAllocator was passing a size_t to FastRange32, which could cause compilation errors or warnings (seen with clang)

* Fixed the order of arguments to match what would be used with modulo operator (%), for clarity.

Fixes https://github.com/facebook/rocksdb/issues/11006

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11707

Test Plan: no functional change, existing tests

Reviewed By: ajkr

Differential Revision: D48435149

Pulled By: pdillinger

fbshipit-source-id: e6e8b107ded4eceda37db20df59985c846a2546b

|

|

Summary:

More code leading up to dynamic HCC.

* Small enhancements to cache_bench

* Extra assertion in Unref

* Improve a CAS loop in ChargeUsageMaybeEvictStrict

* Put load factor constants in appropriate class

* Move `standalone` field to HyperClockTable::HandleImpl because it can be encoded differently in the upcoming dynamic HCC.

* Add a typed version of MemMapping to simplify some future code.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11670

Test Plan: existing tests, unit test added for TypedMemMapping

Reviewed By: jowlyzhang

Differential Revision: D48056464

Pulled By: pdillinger

fbshipit-source-id: 186b7d3105c5d6d2eb6a592369bc10a97ee14a15

|

|

Summary:

In IDE navigation I find it annoying that there are two statistics.h files (etc.) and often land on the wrong one. Here I migrate several headers to use the blah.h <- blah_impl.h <- blah.cc idiom. Although clang-format wants "blah.h" to be the top include for "blah.cc", I think overall this is an improvement.

No public API changes.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11408

Test Plan: existing tests

Reviewed By: ltamasi

Differential Revision: D45456696

Pulled By: pdillinger

fbshipit-source-id: 809d931253f3272c908cf5facf7e1d32fc507373

|

|

Summary:

RocksDB's jemalloc no-dump allocator (`NewJemallocNodumpAllocator()`) was using a single manual arena. This arena's lock contention could be very high when thread caching is disabled for RocksDB blocks (e.g., when using `MALLOC_CONF='tcache_max:4096'` and `rocksdb_block_size=16384`).

This PR changes the jemalloc no-dump allocator to use a configurable number of manual arenas. That number is required to be a power of two so we can avoid division. The allocator shards allocation requests randomly across those manual arenas.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11400

Test Plan:

- mysqld setup

- Branch: fb-mysql-8.0.28 (https://github.com/facebook/mysql-5.6/commit/653eba2e56cfba4eac0c851ac9a70b2da9607527)

- Build: `mysqlbuild.sh --clean --release`

- Set env var `MALLOC_CONF='tcache_max:$tcache_max'`

- Added CLI args `--rocksdb_cache_dump=false --rocksdb_block_cache_size=4294967296 --rocksdb_block_size=16384`

- Ran under /usr/bin/time

- Large database scenario

- Setup command: `mysqlslap -h 127.0.0.1 -P 13020 --auto-generate-sql=1 --auto-generate-sql-load-type=write --auto-generate-sql-guid-primary=1 --number-char-cols=8 --auto-generate-sql-execute-number=262144 --concurrency=32 --no-drop`

- Benchmark command: `mysqlslap -h 127.0.0.1 -P 13020 --query='select count(*) from mysqlslap.t1;' --number-of-queries=320 --concurrency=32`

- Results:

| tcache_max | num_arenas | Peak RSS MB (% change) | Query latency seconds (% change) |

|---|---|---|---|

| 4096 | **(baseline)** | 4541 | 37.1 |

| 4096 | 1 | 4535 (-0.1%) | 36.7 (-1%) |

| 4096 | 8 | 4687 (+3%) | 10.2 (-73%) |

| 16384 | **(baseline)** | 4514 | 8.4 |

| 16384 | 1 | 4526 (+0.3%) | 8.5 (+1%) |

| 16384 | 8 | 4580 (+1%) | 8.5 (+1%) |

Reviewed By: pdillinger

Differential Revision: D45220794

Pulled By: ajkr

fbshipit-source-id: 9a50c9872bdef5d299e52b115a65ee8a5557d58d

|

|

Summary:

We haven't been actively mantaining RocksDB LITE recently and the size must have been gone up significantly. We are removing the support.

Most of changes were done through following comments:

unifdef -m -UROCKSDB_LITE `git grep -l ROCKSDB_LITE | egrep '[.](cc|h)'`

by Peter Dillinger. Others changes were manually applied to build scripts, CircleCI manifests, ROCKSDB_LITE is used in an expression and file db_stress_test_base.cc.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11147

Test Plan: See CI

Reviewed By: pdillinger

Differential Revision: D42796341

fbshipit-source-id: 4920e15fc2060c2cd2221330a6d0e5e65d4b7fe2

|

|

Summary:

This is several refactorings bundled into one to avoid having to incrementally re-modify uses of Cache several times. Overall, there are breaking changes to Cache class, and it becomes more of low-level interface for implementing caches, especially block cache. New internal APIs make using Cache cleaner than before, and more insulated from block cache evolution. Hopefully, this is the last really big block cache refactoring, because of rather effectively decoupling the implementations from the uses. This change also removes the EXPERIMENTAL designation on the SecondaryCache support in Cache. It seems reasonably mature at this point but still subject to change/evolution (as I warn in the API docs for Cache).

The high-level motivation for this refactoring is to minimize code duplication / compounding complexity in adding SecondaryCache support to HyperClockCache (in a later PR). Other benefits listed below.

* static_cast lines of code +29 -35 (net removed 6)

* reinterpret_cast lines of code +6 -32 (net removed 26)

## cache.h and secondary_cache.h

* Always use CacheItemHelper with entries instead of just a Deleter. There are several motivations / justifications:

* Simpler for implementations to deal with just one Insert and one Lookup.

* Simpler and more efficient implementation because we don't have to track which entries are using helpers and which are using deleters

* Gets rid of hack to classify cache entries by their deleter. Instead, the CacheItemHelper includes a CacheEntryRole. This simplifies a lot of code (cache_entry_roles.h almost eliminated). Fixes https://github.com/facebook/rocksdb/issues/9428.

* Makes it trivial to adjust SecondaryCache behavior based on kind of block (e.g. don't re-compress filter blocks).

* It is arguably less convenient for many direct users of Cache, but direct users of Cache are now rare with introduction of typed_cache.h (below).

* I considered and rejected an alternative approach in which we reduce customizability by assuming each secondary cache compatible value starts with a Slice referencing the uncompressed block contents (already true or mostly true), but we apparently intend to stack secondary caches. Saving an entry from a compressed secondary to a lower tier requires custom handling offered by SaveToCallback, etc.

* Make CreateCallback part of the helper and introduce CreateContext to work with it (alternative to https://github.com/facebook/rocksdb/issues/10562). This cleans up the interface while still allowing context to be provided for loading/parsing values into primary cache. This model works for async lookup in BlockBasedTable reader (reader owns a CreateContext) under the assumption that it always waits on secondary cache operations to finish. (Otherwise, the CreateContext could be destroyed while async operation depending on it continues.) This likely contributes most to the observed performance improvement because it saves an std::function backed by a heap allocation.

* Use char* for serialized data, e.g. in SaveToCallback, where void* was confusingly used. (We use `char*` for serialized byte data all over RocksDB, with many advantages over `void*`. `memcpy` etc. are legacy APIs that should not be mimicked.)

* Add a type alias Cache::ObjectPtr = void*, so that we can better indicate the intent of the void* when it is to be the object associated with a Cache entry. Related: started (but did not complete) a refactoring to move away from "value" of a cache entry toward "object" or "obj". (It is confusing to call Cache a key-value store (like DB) when it is really storing arbitrary in-memory objects, not byte strings.)

* Remove unnecessary key param from DeleterFn. This is good for efficiency in HyperClockCache, which does not directly store the cache key in memory. (Alternative to https://github.com/facebook/rocksdb/issues/10774)

* Add allocator to Cache DeleterFn. This is a kind of future-proofing change in case we get more serious about using the Cache allocator for memory tracked by the Cache. Right now, only the uncompressed block contents are allocated using the allocator, and a pointer to that allocator is saved as part of the cached object so that the deleter can use it. (See CacheAllocationPtr.) If in the future we are able to "flatten out" our Cache objects some more, it would be good not to have to track the allocator as part of each object.

* Removes legacy `ApplyToAllCacheEntries` and changes `ApplyToAllEntries` signature for Deleter->CacheItemHelper change.

## typed_cache.h

Adds various "typed" interfaces to the Cache as internal APIs, so that most uses of Cache can use simple type safe code without casting and without explicit deleters, etc. Almost all of the non-test, non-glue code uses of Cache have been migrated. (Follow-up work: CompressedSecondaryCache deserves deeper attention to migrate.) This change expands RocksDB's internal usage of metaprogramming and SFINAE (https://en.cppreference.com/w/cpp/language/sfinae).

The existing usages of Cache are divided up at a high level into these new interfaces. See updated existing uses of Cache for examples of how these are used.

* PlaceholderCacheInterface - Used for making cache reservations, with entries that have a charge but no value.

* BasicTypedCacheInterface<TValue> - Used for primary cache storage of objects of type TValue, which can be cleaned up with std::default_delete<TValue>. The role is provided by TValue::kCacheEntryRole or given in an optional template parameter.

* FullTypedCacheInterface<TValue, TCreateContext> - Used for secondary cache compatible storage of objects of type TValue. In addition to BasicTypedCacheInterface constraints, we require TValue::ContentSlice() to return persistable data. This simplifies usage for the normal case of simple secondary cache compatibility (can give you a Slice to the data already in memory). In addition to TCreateContext performing the role of Cache::CreateContext, it is also expected to provide a factory function for creating TValue.

* For each of these, there's a "Shared" version (e.g. FullTypedSharedCacheInterface) that holds a shared_ptr to the Cache, rather than assuming external ownership by holding only a raw `Cache*`.

These interfaces introduce specific handle types for each interface instantiation, so that it's easy to see what kind of object is controlled by a handle. (Ultimately, this might not be worth the extra complexity, but it seems OK so far.)

Note: I attempted to make the cache 'charge' automatically inferred from the cache object type, such as by expecting an ApproximateMemoryUsage() function, but this is not so clean because there are cases where we need to compute the charge ahead of time and don't want to re-compute it.

## block_cache.h

This header is essentially the replacement for the old block_like_traits.h. It includes various things to support block cache access with typed_cache.h for block-based table.

## block_based_table_reader.cc

Before this change, accessing the block cache here was an awkward mix of static polymorphism (template TBlocklike) and switch-case on a dynamic BlockType value. This change mostly unifies on static polymorphism, relying on minor hacks in block_cache.h to distinguish variants of Block. We still check BlockType in some places (especially for stats, which could be improved in follow-up work) but at least the BlockType is a static constant from the template parameter. (No more awkward partial redundancy between static and dynamic info.) This likely contributes to the overall performance improvement, but hasn't been tested in isolation.

The other key source of simplification here is a more unified system of creating block cache objects: for directly populating from primary cache and for promotion from secondary cache. Both use BlockCreateContext, for context and for factory functions.

## block_based_table_builder.cc, cache_dump_load_impl.cc

Before this change, warming caches was super ugly code. Both of these source files had switch statements to basically transition from the dynamic BlockType world to the static TBlocklike world. None of that mess is needed anymore as there's a new, untyped WarmInCache function that handles all the details just as promotion from SecondaryCache would. (Fixes `TODO akanksha: Dedup below code` in block_based_table_builder.cc.)

## Everything else

Mostly just updating Cache users to use new typed APIs when reasonably possible, or changed Cache APIs when not.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10975

Test Plan:

tests updated

Performance test setup similar to https://github.com/facebook/rocksdb/issues/10626 (by cache size, LRUCache when not "hyper" for HyperClockCache):

34MB 1thread base.hyper -> kops/s: 0.745 io_bytes/op: 2.52504e+06 miss_ratio: 0.140906 max_rss_mb: 76.4844

34MB 1thread new.hyper -> kops/s: 0.751 io_bytes/op: 2.5123e+06 miss_ratio: 0.140161 max_rss_mb: 79.3594

34MB 1thread base -> kops/s: 0.254 io_bytes/op: 1.36073e+07 miss_ratio: 0.918818 max_rss_mb: 45.9297

34MB 1thread new -> kops/s: 0.252 io_bytes/op: 1.36157e+07 miss_ratio: 0.918999 max_rss_mb: 44.1523

34MB 32thread base.hyper -> kops/s: 7.272 io_bytes/op: 2.88323e+06 miss_ratio: 0.162532 max_rss_mb: 516.602

34MB 32thread new.hyper -> kops/s: 7.214 io_bytes/op: 2.99046e+06 miss_ratio: 0.168818 max_rss_mb: 518.293

34MB 32thread base -> kops/s: 3.528 io_bytes/op: 1.35722e+07 miss_ratio: 0.914691 max_rss_mb: 264.926

34MB 32thread new -> kops/s: 3.604 io_bytes/op: 1.35744e+07 miss_ratio: 0.915054 max_rss_mb: 264.488

233MB 1thread base.hyper -> kops/s: 53.909 io_bytes/op: 2552.35 miss_ratio: 0.0440566 max_rss_mb: 241.984

233MB 1thread new.hyper -> kops/s: 62.792 io_bytes/op: 2549.79 miss_ratio: 0.044043 max_rss_mb: 241.922

233MB 1thread base -> kops/s: 1.197 io_bytes/op: 2.75173e+06 miss_ratio: 0.103093 max_rss_mb: 241.559

233MB 1thread new -> kops/s: 1.199 io_bytes/op: 2.73723e+06 miss_ratio: 0.10305 max_rss_mb: 240.93

233MB 32thread base.hyper -> kops/s: 1298.69 io_bytes/op: 2539.12 miss_ratio: 0.0440307 max_rss_mb: 371.418

233MB 32thread new.hyper -> kops/s: 1421.35 io_bytes/op: 2538.75 miss_ratio: 0.0440307 max_rss_mb: 347.273

233MB 32thread base -> kops/s: 9.693 io_bytes/op: 2.77304e+06 miss_ratio: 0.103745 max_rss_mb: 569.691

233MB 32thread new -> kops/s: 9.75 io_bytes/op: 2.77559e+06 miss_ratio: 0.103798 max_rss_mb: 552.82

1597MB 1thread base.hyper -> kops/s: 58.607 io_bytes/op: 1449.14 miss_ratio: 0.0249324 max_rss_mb: 1583.55

1597MB 1thread new.hyper -> kops/s: 69.6 io_bytes/op: 1434.89 miss_ratio: 0.0247167 max_rss_mb: 1584.02

1597MB 1thread base -> kops/s: 60.478 io_bytes/op: 1421.28 miss_ratio: 0.024452 max_rss_mb: 1589.45

1597MB 1thread new -> kops/s: 63.973 io_bytes/op: 1416.07 miss_ratio: 0.0243766 max_rss_mb: 1589.24

1597MB 32thread base.hyper -> kops/s: 1436.2 io_bytes/op: 1357.93 miss_ratio: 0.0235353 max_rss_mb: 1692.92

1597MB 32thread new.hyper -> kops/s: 1605.03 io_bytes/op: 1358.04 miss_ratio: 0.023538 max_rss_mb: 1702.78

1597MB 32thread base -> kops/s: 280.059 io_bytes/op: 1350.34 miss_ratio: 0.023289 max_rss_mb: 1675.36

1597MB 32thread new -> kops/s: 283.125 io_bytes/op: 1351.05 miss_ratio: 0.0232797 max_rss_mb: 1703.83

Almost uniformly improving over base revision, especially for hot paths with HyperClockCache, up to 12% higher throughput seen (1597MB, 32thread, hyper). The improvement for that is likely coming from much simplified code for providing context for secondary cache promotion (CreateCallback/CreateContext), and possibly from less branching in block_based_table_reader. And likely a small improvement from not reconstituting key for DeleterFn.

Reviewed By: anand1976

Differential Revision: D42417818

Pulled By: pdillinger

fbshipit-source-id: f86bfdd584dce27c028b151ba56818ad14f7a432

|

|

Summary:

The change to `make_unique<char[]>` in https://github.com/facebook/rocksdb/issues/10810 inadvertently started initializing data in Arena blocks, which could lead to increased memory use due to (at least on our implementation) force-mapping pages as a result. This change goes back to `new char[]` while keeping all the other good parts of https://github.com/facebook/rocksdb/issues/10810.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/11012

Test Plan: unit test added (fails on Linux before fix)

Reviewed By: anand1976

Differential Revision: D41658893

Pulled By: pdillinger

fbshipit-source-id: 267b7dccfadaeeb1be767d43c602a6abb0e71cd0

|

|

(#10893)

Summary:

**Context/Summary:**

Run the following to format

```

find ./examples -iname *.h -o -iname *.cc | xargs clang-format -i

find ./memory -iname *.h -o -iname *.cc | xargs clang-format -i

find ./memtable -iname *.h -o -iname *.cc | xargs clang-format -i

```

**Test**

- Manual inspection to ensure changes are cosmetic only

- CI

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10893

Reviewed By: jay-zhuang

Differential Revision: D40779187

Pulled By: hx235

fbshipit-source-id: 529cbb0f0fbd698d95817e8c42fe3ce32254d9b0

|

|

Summary:

Instead of existing calls to ps from gnu_parallel, call a new wrapper that does ps, looks for unit test like processes, and uses pstack or gdb to print thread stack traces. Also, using `ps -wwf` instead of `ps -wf` ensures output is not cut off.

For security, CircleCI runs with security restrictions on ptrace (/proc/sys/kernel/yama/ptrace_scope = 1), and this change adds a work-around to `InstallStackTraceHandler()` (only used by testing tools) to allow any process from the same user to debug it. (I've also touched >100 files to ensure all the unit tests call this function.)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10828

Test Plan: local manual + temporary infinite loop in a unit test to observe in CircleCI

Reviewed By: hx235

Differential Revision: D40447634

Pulled By: pdillinger

fbshipit-source-id: 718a4c4a5b54fa0f9af2d01a446162b45e5e84e1

|

|

Summary:

The motivation for this change is a planned feature (related to HyperClockCache) that will depend on a large array that can essentially grow automatically, up to some bound, without the pointer address changing and with guaranteed zero-initialization of the data. Anonymous mmaps provide such functionality, and this change provides an internal API for that.

The other existing use of anonymous mmap in RocksDB is for allocating in huge pages. That code and other related Arena code used some awkward non-RAII and pre-C++11 idioms, so I cleaned up much of that as well, with RAII, move semantics, constexpr, etc.

More specifcs:

* Minimize conditional compilation

* Add Windows support for anonymous mmaps

* Use std::deque instead of std::vector for more efficient bag

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10810

Test Plan: unit test added for new functionality

Reviewed By: riversand963

Differential Revision: D40347204

Pulled By: pdillinger

fbshipit-source-id: ca83fcc47e50fabf7595069380edd2954f4f879c

|

|

Summary:

RocksDB's `Cache` abstraction currently supports two priority levels for items: high (used for frequently accessed/highly valuable SST metablocks like index/filter blocks) and low (used for SST data blocks). Blobs are typically lower-value targets for caching than data blocks, since 1) with BlobDB, data blocks containing blob references conceptually form an index structure which has to be consulted before we can read the blob value, and 2) cached blobs represent only a single key-value, while cached data blocks generally contain multiple KVs. Since we would like to make it possible to use the same backing cache for the block cache and the blob cache, it would make sense to add a new, lower-than-low cache priority level (bottom level) for blobs so data blocks are prioritized over them.

This task is a part of https://github.com/facebook/rocksdb/issues/10156

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10461

Reviewed By: siying

Differential Revision: D38672823

Pulled By: ltamasi

fbshipit-source-id: 90cf7362036563d79891f47be2cc24b827482743

|

|

Summary:

This reverts commit 8d178090beff296f7ab93fa77f91177fe146c156

because of a clear performance regression seen in internal dashboard

https://fburl.com/unidash/tpz75iee

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10434

Reviewed By: ltamasi

Differential Revision: D38256373

Pulled By: pdillinger

fbshipit-source-id: 134aa00f50dd7b1bbe037c227884a351342ec44b

|

|

Summary:

RocksDB's `Cache` abstraction currently supports two priority levels for items: high (used for frequently accessed/highly valuable SST metablocks like index/filter blocks) and low (used for SST data blocks). Blobs are typically lower-value targets for caching than data blocks, since 1) with BlobDB, data blocks containing blob references conceptually form an index structure which has to be consulted before we can read the blob value, and 2) cached blobs represent only a single key-value, while cached data blocks generally contain multiple KVs. Since we would like to make it possible to use the same backing cache for the block cache and the blob cache, it would make sense to add a new, lower-than-low cache priority level (bottom level) for blobs so data blocks are prioritized over them.

This task is a part of https://github.com/facebook/rocksdb/issues/10156

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10309

Reviewed By: ltamasi

Differential Revision: D38211655

Pulled By: gangliao

fbshipit-source-id: 65ef33337db4d85277cc6f9782d67c421ad71dd5

|

|

Summary:

For regular db instance and secondary instance, we return error and refuse to open DB if Logger creation fails.

Our current code allows it, but it is really difficult to debug because

there will be no LOG files. The same for OPTIONS file, which will be explored in another PR.

Furthermore, Arena::AllocateAligned(size_t bytes, size_t huge_page_size, Logger* logger) has an

assertion as the following:

```cpp

#ifdef MAP_HUGETLB

if (huge_page_size > 0 && bytes > 0) {

assert(logger != nullptr);

}

#endif

```

It can be removed.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9984

Test Plan: make check

Reviewed By: jay-zhuang

Differential Revision: D36347754

Pulled By: riversand963

fbshipit-source-id: 529798c0511d2eaa2f0fd40cf7e61c4cbc6bc57e

|

|

Summary:

ROCKSDB_SUPPORT_THREAD_LOCAL definition has been removed.

`__thread`(#define) has been replaced with `thread_local`(C++ keyword) across the code base.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/10015

Reviewed By: siying

Differential Revision: D36485491

Pulled By: pdillinger

fbshipit-source-id: 6522d212514ee190b90b4e2750c80c7e34013c78

|

|

Summary:

When I enable hugepage on my box, unit test fails, this PR fixes this issue:

[ FAILED ] ArenaTest.ApproximateMemoryUsage (1 ms)

memory/arena_test.cc:127: Failure

Value of: arena.IsInInlineBlock()

Actual: true

Expected: false

arena.IsInInlineBlock() = 1

memory/arena_test.cc:127: Failure

Value of: arena.IsInInlineBlock()

Actual: true

Expected: false

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9317

Reviewed By: ajkr

Differential Revision: D36219813

fbshipit-source-id: 08d040d9f37ec4c16987e4150c2db876180d163d

|

|

Summary:

ToString() is created as some platform doesn't support std::to_string(). However, we've already used std::to_string() by mistake for 16 months (in db/db_info_dumper.cc). This commit just remove ToString().

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9955

Test Plan: Watch CI tests

Reviewed By: riversand963

Differential Revision: D36176799

fbshipit-source-id: bdb6dcd0e3a3ab96a1ac810f5d0188f684064471

|

|

Summary:

Especially after updating to C++17, I don't see a compelling case for

*requiring* any folly components in RocksDB. I was able to purge the existing

hard dependencies, and it can be quite difficult to strip out non-trivial components

from folly for use in RocksDB. (The prospect of doing that on F14 has changed

my mind on the best approach here.)

But this change creates an optional integration where we can plug in

components from folly at compile time, starting here with F14FastMap to replace

std::unordered_map when possible (probably no public APIs for example). I have

replaced the biggest CPU users of std::unordered_map with compile-time

pluggable UnorderedMap which will use F14FastMap when USE_FOLLY is set.

USE_FOLLY is always set in the Meta-internal buck build, and a simulation of

that is in the Makefile for public CI testing. A full folly build is not needed, but

checking out the full folly repo is much simpler for getting the dependency,

and anything else we might want to optionally integrate in the future.

Some picky details:

* I don't think the distributed mutex stuff is actually used, so it was easy to remove.

* I implemented an alternative to `folly::constexpr_log2` (which is much easier

in C++17 than C++11) so that I could pull out the hard dependencies on

`ConstexprMath.h`

* I had to add noexcept move constructors/operators to some types to make

F14's complainUnlessNothrowMoveAndDestroy check happy, and I added a

macro to make that easier in some common cases.

* Updated Meta-internal buck build to use folly F14Map (always)

No updates to HISTORY.md nor INSTALL.md as this is not (yet?) considered a

production integration for open source users.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9546

Test Plan:

CircleCI tests updated so that a couple of them use folly.

Most internal unit & stress/crash tests updated to use Meta-internal latest folly.

(Note: they should probably use buck but they currently use Makefile.)

Example performance improvement: when filter partitions are pinned in cache,

they are tracked by PartitionedFilterBlockReader::filter_map_ and we can build

a test that exercises that heavily. Build DB with

```

TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=30000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -partition_index_and_filters

```

and test with (simultaneous runs with & without folly, ~20 times each to see

convergence)

```

TEST_TMPDIR=/dev/shm/rocksdb ./db_bench_folly -readonly -use_existing_db -benchmarks=readrandom -num=10000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -partition_index_and_filters -duration=40 -pin_l0_filter_and_index_blocks_in_cache

```

Average ops/s no folly: 26229.2

Average ops/s with folly: 26853.3 (+2.4%)

Reviewed By: ajkr

Differential Revision: D34181736

Pulled By: pdillinger

fbshipit-source-id: ffa6ad5104c2880321d8a1aa7187e00ab0d02e94

|

|

Summary:

This fix addresses https://github.com/facebook/rocksdb/issues/9299.

If attempting to create a new object via the ObjectRegistry and a factory is not found, the ObjectRegistry will return a "NotSupported" status. This is the same behavior as previously.

If the factory is found but could not successfully create the object, an "InvalidArgument" status is returned. If the factory returned a reason why (in the errmsg), this message will be in the returned status.

In practice, there are two options in the ConfigOptions that control how these errors are propagated:

- If "ignore_unknown_options=true", then both InvalidArgument and NotSupported status codes will be swallowed internally. Both cases will return success

- If "ignore_unsupported_options=true", then having no factory will return success but a failing factory will return an error

- If both options are false, both cases (no and failing factory) will return errors.

In practice this likely only changes Customizable that may be partially available. For example, the JEMallocMemoryAllocator is a built-in allocator that is registered with the system but may not be compiled in. In this case, the status code for this allocator changed from NotSupported("JEMalloc not available") to InvalidArgumen("JEMalloc not available"). Other Customizable builtins/plugins would have the same semantics.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9333

Reviewed By: pdillinger

Differential Revision: D33517681

Pulled By: mrambacher

fbshipit-source-id: 8033052d4a4a7b88c2d9f90147b1b4467e51f6fd

|

|

Summary:

With memkind installed, either on a non-fb machine or using `ROCKSDB_NO_FBCODE=1`.

```

ROCKSDB_NO_FBCODE=1 make static_lib

```

Compilation failed due to unused variable warning treated as error. To bypass this, we need to

disable warning-as-error, which is not ideal.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9377

Test Plan: Repeat the above command, and rely on CI.

Reviewed By: ajkr

Differential Revision: D33543343

Pulled By: riversand963

fbshipit-source-id: 9a2790b38c00b8696c7910287f4ae5a9b394341d

|

|

old one to be deprecated in the future (#9362)

Summary:

In order to support old-style regex function registration, restored the original "Register<T>(string, Factory)" method using regular expressions. The PatternEntry methods were left in place but renamed to AddFactory. The goal is to allow for the deprecation of the original regex Registry method in an upcoming release.

Added modes to the PatternEntry kMatchZeroOrMore and kMatchAtLeastOne to match * or +, respectively (kMatchAtLeastOne was the original behavior).

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9362

Reviewed By: pdillinger

Differential Revision: D33432562

Pulled By: mrambacher

fbshipit-source-id: ed88ab3f9a2ad0d525c7bd1692873f9bb3209d02

|

|

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9340

Reviewed By: riversand963

Differential Revision: D33344152

Pulled By: mrambacher

fbshipit-source-id: 283637625b86c33497571c5f52cac3ddf910b6f3

|

|

Summary:

The tests rely on `CreateFromString()`, which returns

`Status::NotSupported()` when these tests attempt to create non-default

allocators.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/9318

Reviewed By: riversand963

Differential Revision: D33238405

Pulled By: ajkr

fbshipit-source-id: d2974e2341f1494f5f7cd07b73f2dbd0d502fc7c

|

|

Summary:

- Make MemoryAllocator and its implementations into a Customizable class.

- Added a "DefaultMemoryAllocator" which uses new and delete

- Added a "CountedMemoryAllocator" that counts the number of allocs and free

- Updated the existing tests to use these new allocators

- Changed the memkind allocator test into a generic test that can test the various allocators.

- Added tests for creating all of the allocators

- Added tests to verify/create the JemallocNodumpAllocator using its options.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8980

Reviewed By: zhichao-cao

Differential Revision: D32990403

Pulled By: mrambacher

fbshipit-source-id: 6fdfe8218c10dd8dfef34344a08201be1fa95c76

|

|

Summary:

It's always annoying to find a header does not include its own

dependencies and only works when included after other includes. This

change adds `make check-headers` which validates that each header can

be included at the top of a file. Some headers are excluded e.g. because

of platform or external dependencies.

rocksdb_namespace.h had to be re-worked slightly to enable checking for

failure to include it. (ROCKSDB_NAMESPACE is a valid namespace name.)

Fixes mostly involve adding and cleaning up #includes, but for

FileTraceWriter, a constructor was out-of-lined to make a forward

declaration sufficient.

This check is not currently run with `make check` but is added to

CircleCI build-linux-unity since that one is already relatively fast.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8893

Test Plan: existing tests and resolving issues detected by new check

Reviewed By: mrambacher

Differential Revision: D30823300

Pulled By: pdillinger

fbshipit-source-id: 9fff223944994c83c105e2e6496d24845dc8e572

|

|

Summary:

* Don't hardcode namespace rocksdb (use ROCKSDB_NAMESPACE)

* Don't #include <rocksdb/...> (use double quotes)

* Support putting NOCOMMIT (any case) in source code that should not be

committed/pushed in current state.

These will be run with `make check` and in GitHub actions

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8821

Test Plan: existing tests, manually try out new checks

Reviewed By: zhichao-cao

Differential Revision: D30791726

Pulled By: pdillinger

fbshipit-source-id: 399c883f312be24d9e55c58951d4013e18429d92

|

|

Summary:

Old typedef syntax is confusing

Most but not all changes with

perl -pi -e 's/typedef (.*) ([a-zA-Z0-9_]+);/using $2 = $1;/g' list_of_files

make format

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8751

Test Plan: existing

Reviewed By: zhichao-cao

Differential Revision: D30745277

Pulled By: pdillinger

fbshipit-source-id: 6f65f0631c3563382d43347896020413cc2366d9

|

|

Summary:

`strerror()` is not thread-safe, using `strerror_r()` instead. The API could be different on the different platforms, used the code from https://github.com/facebook/folly/blob/0deef031cb8aab76dc7e736f8b7c22d701d5f36b/folly/String.cpp#L457

Pull Request resolved: https://github.com/facebook/rocksdb/pull/8087

Reviewed By: mrambacher

Differential Revision: D27267151

Pulled By: jay-zhuang

fbshipit-source-id: 4b8856d1ec069d5f239b764750682c56e5be9ddb

|

|

Summary:

Fix a few typos and avoid a potential nullptr dereference.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/7592

Reviewed By: zhichao-cao

Differential Revision: D24582111

Pulled By: riversand963

fbshipit-source-id: 51e9260e8cad1fcdedd310c889f0faeec6efd937

|

|

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/7593

Reviewed By: zhichao-cao

Differential Revision: D24576218

Pulled By: riversand963

fbshipit-source-id: a3d77191362ca696ae9df643f97f4ab5b7ecff12

|

|

Summary:

Based on https://github.com/facebook/rocksdb/issues/6648 (CLA Signed), but heavily modified / extended:

* Implicit capture of this via [=] deprecated in C++20, and [=,this] not standard before C++20 -> now using explicit capture lists

* Implicit copy operator deprecated in gcc 9 -> add explicit '= default' definition

* std::random_shuffle deprecated in C++17 and removed in C++20 -> migrated to a replacement in RocksDB random.h API

* Add the ability to build with different std version though -DCMAKE_CXX_STANDARD=11/14/17/20 on the cmake command line

* Minimal rebuild flag of MSVC is deprecated and is forbidden with /std:c++latest (C++20)

* Added MSVC 2019 C++11 & MSVC 2019 C++20 in AppVeyor

* Added GCC 9 C++11 & GCC9 C++20 in Travis

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6697

Test Plan: make check and CI

Reviewed By: cheng-chang

Differential Revision: D21020318

Pulled By: pdillinger

fbshipit-source-id: 12311be5dbd8675a0e2c817f7ec50fa11c18ab91

|

|

Summary:

Two recent diffs can be autoformatted.

Also add HISTORY.md entry for https://github.com/facebook/rocksdb/pull/6214

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6685

Test Plan: Run all existing tests

Reviewed By: cheng-chang

Differential Revision: D20965780

fbshipit-source-id: 195b08d7849513d42fe14073112cd19fdda6af95

|

|

(#6214)

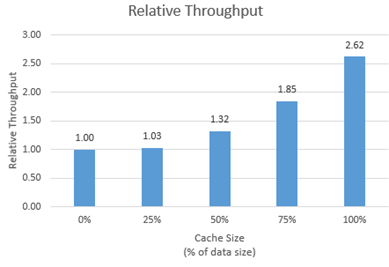

Summary:

New memory technologies are being developed by various hardware vendors (Intel DCPMM is one such technology currently available). These new memory types require different libraries for allocation and management (such as PMDK and memkind). The high capacities available make it possible to provision large caches (up to several TBs in size), beyond what is achievable with DRAM.

The new allocator provided in this PR uses the memkind library to allocate memory on different media.

**Performance**

We tested the new allocator using db_bench.

- For each test, we vary the size of the block cache (relative to the size of the uncompressed data in the database).

- The database is filled sequentially. Throughput is then measured with a readrandom benchmark.

- We use a uniform distribution as a worst-case scenario.

The plot shows throughput (ops/s) relative to a configuration with no block cache and default allocator.

For all tests, p99 latency is below 500 us.

**Changes**

- Add MemkindKmemAllocator

- Add --use_cache_memkind_kmem_allocator db_bench option (to create an LRU block cache with the new allocator)

- Add detection of memkind library with KMEM DAX support

- Add test for MemkindKmemAllocator

**Minimum Requirements**

- kernel 5.3.12

- ndctl v67 - https://github.com/pmem/ndctl

- memkind v1.10.0 - https://github.com/memkind/memkind

**Memory Configuration**

The allocator uses the MEMKIND_DAX_KMEM memory kind. Follow the instructions on[ memkind’s GitHub page](https://github.com/memkind/memkind) to set up NVDIMM memory accordingly.

Note on memory allocation with NVDIMM memory exposed as system memory.

- The MemkindKmemAllocator will only allocate from NVDIMM memory (using memkind_malloc with MEMKIND_DAX_KMEM kind).

- The default allocator is not restricted to RAM by default. Based on NUMA node latency, the kernel should allocate from local RAM preferentially, but it’s a kernel decision. numactl --preferred/--membind can be used to allocate preferentially/exclusively from the local RAM node.

**Usage**

When creating an LRU cache, pass a MemkindKmemAllocator object as argument.

For example (replace capacity with the desired value in bytes):

```

#include "rocksdb/cache.h"

#include "memory/memkind_kmem_allocator.h"

NewLRUCache(

capacity /*size_t*/,

6 /*cache_numshardbits*/,

false /*strict_capacity_limit*/,

false /*cache_high_pri_pool_ratio*/,

std::make_shared<MemkindKmemAllocator>());

```

Refer to [RocksDB’s block cache documentation](https://github.com/facebook/rocksdb/wiki/Block-Cache) to assign the LRU cache as block cache for a database.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6214

Reviewed By: cheng-chang

Differential Revision: D19292435

fbshipit-source-id: 7202f47b769e7722b539c86c2ffd669f64d7b4e1

|

|

Summary:

When dynamically linking two binaries together, different builds of RocksDB from two sources might cause errors. To provide a tool for user to solve the problem, the RocksDB namespace is changed to a flag which can be overridden in build time.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6433

Test Plan: Build release, all and jtest. Try to build with ROCKSDB_NAMESPACE with another flag.

Differential Revision: D19977691

fbshipit-source-id: aa7f2d0972e1c31d75339ac48478f34f6cfcfb3e

|

|

Summary:

For our default block cache, each additional entry has extra memory overhead. It include LRUHandle (72 bytes currently) and the cache key (two varint64, file id and offset). The usage is not negligible. For example for block_size=4k, the overhead accounts for an extra 2% memory usage for the cache. The patch charging the cache for the extra usage, reducing untracked memory usage outside block cache. The feature is enabled by default and can be disabled by passing kDontChargeCacheMetadata to the cache constructor.

This PR builds up on https://github.com/facebook/rocksdb/issues/4258

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5797

Test Plan:

- Existing tests are updated to either disable the feature when the test has too much dependency on the old way of accounting the usage or increasing the cache capacity to account for the additional charge of metadata.

- The Usage tests in cache_test.cc are augmented to test the cache usage under kFullChargeCacheMetadata.

Differential Revision: D17396833

Pulled By: maysamyabandeh

fbshipit-source-id: 7684ccb9f8a40ca595e4f5efcdb03623afea0c6f

|

|

Summary:

Use delete to disable automatic generated methods instead of private, and put the constructor together for more clear.This modification cause the unused field warning, so add unused attribute to disable this warning.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5009

Differential Revision: D17288733

fbshipit-source-id: 8a767ce096f185f1db01bd28fc88fef1cdd921f3

|

|

Summary:

The `#include "core_local.h"` was pulling in libgcc's `posix_memalign()`

declaration. That declaration specifies `throw()` whereas musl libc's

declaration does not. This was leading to the following compiler error

when using musl libc:

```

In file included from /go/src/github.com/cockroachdb/cockroach/c-deps/rocksdb/port/jemalloc_helper.h:26:0,

from /go/src/github.com/cockroachdb/cockroach/c-deps/rocksdb/util/jemalloc_nodump_allocator.h:11,

from /go/src/github.com/cockroachdb/cockroach/c-deps/rocksdb/util/jemalloc_nodump_allocator.cc:6:

/go/native/x86_64-unknown-linux-musl/jemalloc/include/jemalloc/jemalloc.h:63:29: error: declaration of 'int posix_memalign(void**, size_t, size_t) throw ()' has a different exception specifier

# define je_posix_memalign posix_memalign

^

/go/native/x86_64-unknown-linux-musl/jemalloc/include/jemalloc/jemalloc.h:63:29: note: from previous declaration 'int posix_memalign(void**, size_t, size_t)'

# define je_posix_memalign posix_memalign

^

/go/native/x86_64-unknown-linux-musl/jemalloc/include/jemalloc/jemalloc.h:202:38: note: in expansion of macro 'je_posix_memalign'

JEMALLOC_EXPORT int JEMALLOC_NOTHROW je_posix_memalign(void **memptr,

^~~~~~~~~~~~~~~~~

make[4]: *** [CMakeFiles/rocksdb.dir/util/jemalloc_nodump_allocator.cc.o] Error 1

```

Since `#include "core_local.h"` is not actually used, we can just remove

it. I verified that fixes the build.

There was a related PR here (https://github.com/facebook/rocksdb/issues/2188), although the problem description is

slightly different.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5583

Differential Revision: D16343227

fbshipit-source-id: 0386bc2b5fd55b2c3b5fba19382014efa52e44f8

|

|

Summary:

Many logging related source files are under util/. It will be more structured if they are together.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5387

Differential Revision: D15579036

Pulled By: siying

fbshipit-source-id: 3850134ed50b8c0bb40a0c8ae1f184fa4081303f

|

|

Summary:

Move arena, allocator, and memory tools under util to a separate memory/ directory.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5382

Differential Revision: D15564655

Pulled By: siying

fbshipit-source-id: 9cd6b5d0d3d52b39606e19221fa154596e5852a5

|